The Singularity Could Be Closer Than You Think

A.I. evolution may be decades away -- or happening already.

Sci-fi depictions of the future would have us think of the singularity (the point at which A.I. surpasses human intelligence) as either a technological messiah or the apocalypse. Experts in the field hold varying opinions about how good or bad, and how gradual or sudden, it will be, but most agree that it will happen within the next hundred years, and probably sooner.

Futurists like Ray Kurzweil, a computer scientist and Google director of engineering, are proponents of a “hard singularity,” the kind that will happen on a specific date.

“We’re going to get more neocortex, we’re going to be funnier, we’re going to be better at music,” Kurzweil said earlier this year at SXSW in Austin, Texas. “We’re going to be sexier. We’re really going to exemplify all the things that we value in humans to a greater degree.”

Kurzweil has made 147 predictions since the Nineties about when the singularity will occur, his most recent being 2045. He has also predicted that artificial intelligence will achieve human levels of intelligence by 2029. In 2045, he says, “we will multiply our effective intelligence a billionfold by merging with the intelligence we have created.”

Kurzweil takes a positive view of the singularity, championing the event as a way to overcome age-old human problems and magnify human intelligence, as he details in his 2005 book The Singularity Is Near. “[The singularity] will result from the merger of the vast knowledge embedded in our own brains with the vastly greater capacity, speed, and knowledge-sharing ability of our technology,” he writes. “The fifth epoch [when the singularity happens] will enable our human-machine civilization to transcend the human brain’s limitations of a mere hundred trillion extremely slow connections.”

Meanwhile, Masayoshi Son, CEO of SoftBank Group, argues that the singularity will happen by 2047.

“One of the chips in our shoes in the next 30 years will be smarter than our brain. We will be less than our shoes. And we are stepping on them,” Son said earlier this year. “I think this super intelligence is going to be our partner. If we misuse it, it’s a risk. If we use it in good spirits it will be our partner for a better life.”

But how do we define the singularity? To Damien Scott, a graduate student in Stanford University’s schools of business and of energy and earth sciences, it’s the point at which we can no longer predict A.I.’s motives.

“For some people, the singularity is the moment in which we have an artificial super intelligence which has all the capabilities of the human mind, and is slightly better than the human mind,” Scott tells Inverse. “But I think that is a big ask.” That view holds not only that the software or other platform behind the singularity will be smarter than people, but also that it will be able to improve itself, he explains. At that point, he says, “you get into territory when the system is smarter than the smartest human, and you can’t comprehend what it will do or what its objective is.”

That’s the more classical version of the singularity, Scott says, the hard take on a particular moment that may never actually occur all at once. But then, there’s another, softer take on the singularity, which seems to be more widely accepted.

“We’ll start to see narrow artificial intelligence domains that keep getting better than the best human,” Scott says. We already have calculators that can outperform any person, and within two to three years, the world’s best radiologists will be computer systems, he says. Rather than an all-encompassing general intelligence that’s better than humans at every single thing, Scott says, the singularity will occur (and is already occurring) piecemeal, across various fields of artificial intelligence.

“Will it be self-aware or self-improving? Not necessarily,” he says. “But that might be the kind of creep of the singularity across a whole bunch of different domains: All these things getting better and better, as an overall set of services that collectively surpass our collective human capabilities.”

Is the singularity already happening? Case in point: A.I. beats humanity at Go.

The gradual singularity is the kind that Scott and others interviewed for this article predict will happen, rather than a momentous event on a random afternoon 28 years from now.

The singularity is already happening, according to Aaron Frank, principal faculty at Singularity University, a technology learning center. “It’s a way of describing this rapid pace of change that the world is now experiencing,” he says. “The term singularity that I subscribe to is a term borrowed from physics to describe an environment in which [we] no longer really understand much of what’s happening around us. For example, the event horizon of a black hole is referred to as a singularity because the laws of physics don’t apply there.”

You’ll see signatures of the singularity whenever you’re surprised by certain technological events, Frank says. From image recognition to A.I. chess and poker players outperforming humans, it’s not always clear why technology has come to perform better than the people who created it, even though people mostly know what to expect from it. “But if you look inside these algorithms, they’re already far more complex than any one human can truly understand,” he says. “We used to think technology gave us total control and knowledge on what’s happening, but now we no longer understand what’s happening.”

It’s an exchange, Frank says: in return for giving up total understanding, people are freed up to handle other tasks while A.I. takes care of the rest. “If we can turn over the diagnosing of cancer to a machine learning algorithm, that frees up doctors to do other things like providing care to a patient,” he says.

Echoing Frank’s perspective on the singularity, Ed Hesse, CEO of Grid Singularity, a blockchain technology and smart contract developer, points to technologies like Ethereum, a decentralized platform similar to bitcoin that runs smart contracts on its own custom-built blockchain. “It’s a starting point,” he says, another present-day manifestation of the singularity. “Once you have decentralized programs to facilitate all types of transactions and eradicate the middle man, the next level is A.I. and machine learning. Everything becomes one.”

A platform like Ethereum can be used to find all kinds of patterns, for specific purposes, that people couldn’t have seen before or on their own, Hesse explains. Open yet secure, Ethereum surfaces information that otherwise wouldn’t have been available.

“At the moment, [because] A.I. is very domain specific, it’s not generally smart in any way, but can learn to do something particularly well,” says Dr. Mark Sagar, CEO and founder of Soul Machines, an artificial intelligence company that creates emotionally responsive avatars. “The way it holistically comes together into something that can actually control itself and be autonomous takes many different components. It makes sense that could happen by 2048.”

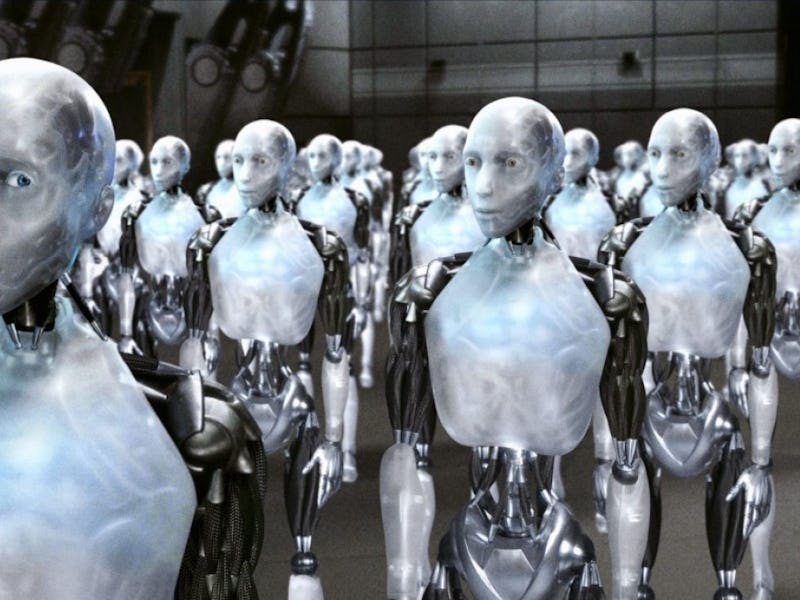

Robots are everywhere in Japan -- a glimpse of the future?

So in the event of the singularity, be it sudden or gradual, will the hallmarks of human intelligence be preserved, in spite of A.I. that’s smarter than us?

“A lot of philosophers describe the essence of humanity as being able to feel emotions,” says Scott. “There’s a difference between experiencing emotions and understanding emotions.” A machine might be able to understand emotions, based on indicators like facial recognition or language, but its inability to feel “cognizant empathy” would separate purely artificial intelligence from that which is human, he says.

“There is a lot that we don’t know about how the brain works, for example, so there’s a lot that still has to be discovered and determined as keys to realizing the proper A.I.,” says Sagar. He foresees the development of a “digital biology,” or a hybrid of biological and digital systems. “Take Google for example. It’s got better knowledge than any person on the planet — general knowledge — but no practical knowledge about dealing with the situation in front of you that would emerge.”

Hence, humanizing A.I. is one of the key components of a true singularity, Sagar suggests. “Having computers that are capable of embodied cognition and social learning will be very important to the socialization of machines,” he says. “Think about things like cooperation being the greatest force in human history. For us to cooperate with machines is one level, then for machines to cooperate with machines is another. It may well be that humans are always in there.”

For machines to constructively cooperate with humans and other machines, humans would have to engineer an element of creativity into A.I., Sagar says. And it’s possible, he adds: “Where we start seeing machines create new ideas, the machines will be inventing new things.”

One of the biggest obstacles here will remain human suspicion of smart computers, drawn from decades of dystopian fantasies. Yet Sagar says we’re already seeing breakthroughs in places like Japan, where home robots are part of people’s daily routines. Those machines are often anthropomorphized and socially integrated, with transparent capabilities and intentions that people gladly accept. That’s a hint of what the singularity can bring, he says:

“I think it will be an unprecedented time of cooperation and creativity.”