The Justice League of artificial intelligence is finally here.

Nearly every major tech company with a large investment in AI (namely Facebook, Amazon, IBM, Microsoft, and Google) has come together to create a non-profit organization to educate the public about the technology and share industry best practices on transparency and ethics. It’s named the Partnership on Artificial Intelligence to Benefit People and Society (also acceptably called the Partnership on AI, or PAI).

“We recognize we have to take the field forward in a thoughtful and positive and implicitly ethical way,” said Mustafa Suleyman, co-chair of the Partnership on AI and co-founder of Google DeepMind, in a call with journalists before the announcement today (Sept. 28). “The positive impact of AI will depend not only on the quality of our algorithms, but on the level of public engagement, on transparency, and ethical discussion that takes place around it.”

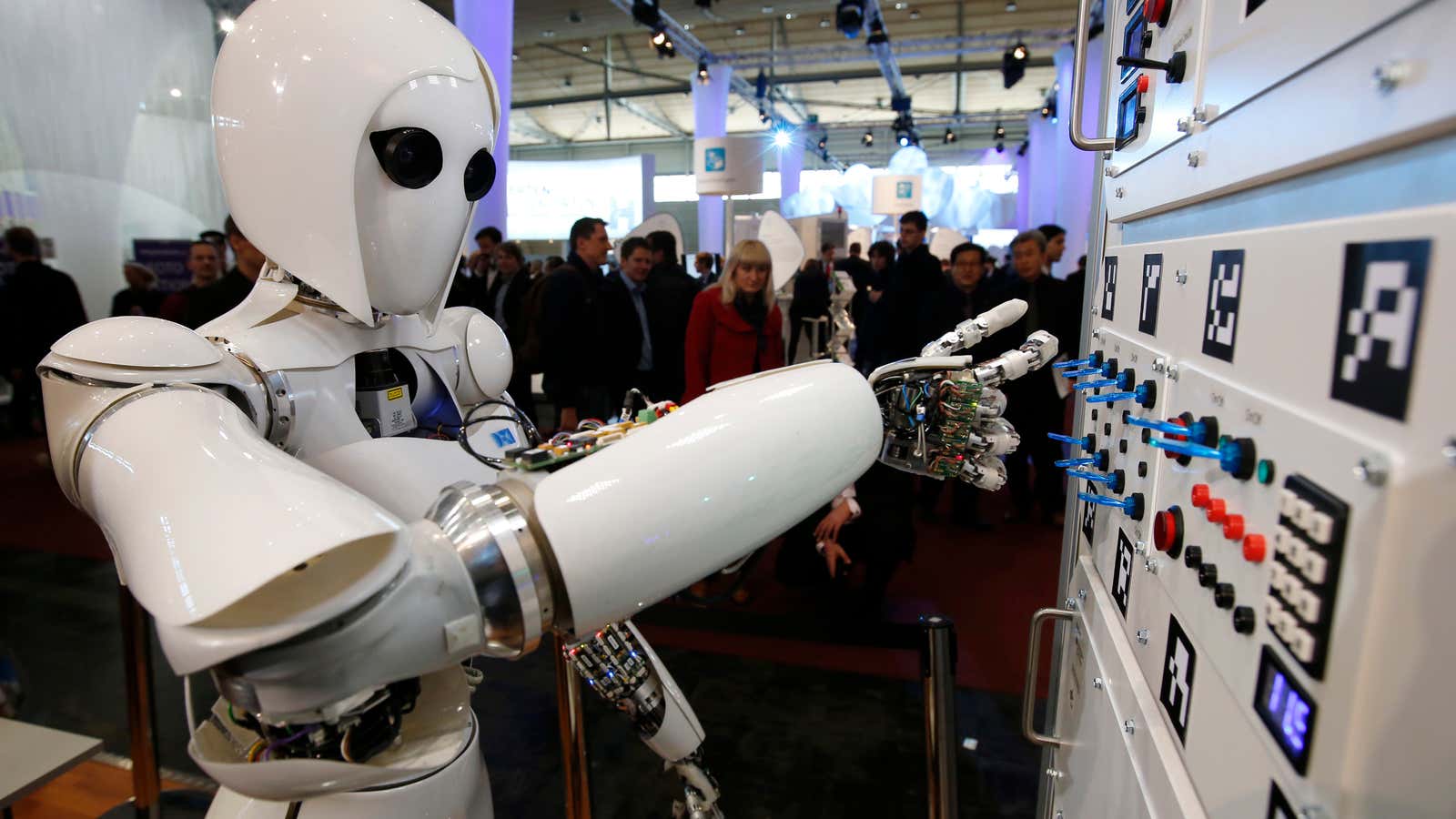

The partnership is built upon the notion that AI is a daunting, scary invention that the general public doesn’t necessarily trust or understand. By banding together, these tech companies are creating a united front, a show of good faith among competitors, to promise that this tectonic shift in computing won’t come at the world’s expense. Common fears that the group wants to head off are that AI will eliminate entire industries that employ humans, concentrate wealth among only those who control AI, or accidentally spawn a super-intelligence that seeks world domination. In the coming months, the group hopes to bring in academics, non-profits, and independent researchers to bolster its research.

“This is urgent and important work. This is work that supersedes the competitive concerns of the companies [in the partnership],” said Francesca Rossi, an AI ethics researcher at IBM Research.

While a large part of the group’s mission is to assuage fears, the partnership also creates a formal framework for sharing ideas about transparency and ethics between organizations. Eric Horvitz, PAI co-chair and managing director of Microsoft Research, says they’ve had conversations about open-sourcing data used to train AI, making it easier for everyone to build machines that avoid hidden biases, as well as raising awareness about the manner in which data is used.

What the organization will actually produce, and what role it would play if a member violated the group’s ethics, is still unclear. According to documents outlining the partnership’s tenets, the group was formed in part to oppose development and use of AI that would “violate human rights.” However, Suleyman and Horvitz said in the call with journalists that the organization isn’t regulatory and would not enforce sanctions on a company that violated the group’s ethics. So rather than a self-governing body, it is merely a forum to showcase best practices and share information.

The organization’s goals are objectively noble: to create standards for transparency, algorithmic accountability, fairness, and ethics, while teaching everyone from users to the US government how the technology works. Member companies say they’ll conduct and publish open research on these topics, and contribute financial support to further study. The frequency and content of these studies have not been settled, and the organization isn’t launching with any initial studies or findings. Its tenets are as follows:

1. We will seek to ensure that AI technologies benefit and empower as many people as possible.

2. We will educate and listen to the public and actively engage stakeholders to seek their feedback on our focus, inform them of our work, and address their questions.

3. We are committed to open research and dialog on the ethical, social, economic, and legal implications of AI.

4. We believe that AI research and development efforts need to be actively engaged with and accountable to a broad range of stakeholders.

5. We will engage with and have representation from stakeholders in the business community to help ensure that domain-specific concerns and opportunities are understood and addressed.

6. We will work to maximize the benefits and address the potential challenges of AI technologies, by:
   - Working to protect the privacy and security of individuals.
   - Striving to understand and respect the interests of all parties that may be impacted by AI advances.
   - Working to ensure that AI research and engineering communities remain socially responsible, sensitive and engaged directly with the potential influences of AI technologies on wider society.
   - Ensuring that AI research and technology is robust, reliable, trustworthy, and operates within secure constraints.
   - Opposing development and use of AI technologies that would violate international conventions or human rights, and promoting safeguards and technologies that do no harm.

7. We believe that it is important for the operation of AI systems to be understandable and interpretable by people, for purposes of explaining the technology.

8. We strive to create a culture of cooperation, trust, and openness among AI scientists and engineers to help us all better achieve these goals.

Company-led coalitions around technology are all the rage right now. Ford, Google, Lyft, Uber, and Volvo formed a similar organization earlier this year called the Self-Driving Coalition for Safer Streets, which lobbies lawmakers and provides education to the public on autonomous transportation. DJI, 3D Robotics, Parrot, and GoPro also formed a coalition of drone makers earlier this year. The Partnership on AI has stated that it will not lobby governments, but will instead provide outreach and research on the technology to a wide swath of interested parties (including governments).