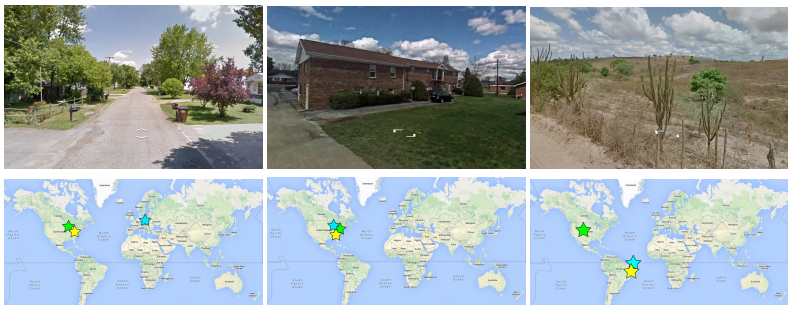

Google's latest deep-learning program can figure out where a photo was taken just by looking at it. The program, called PlaNet, was trained to recognize locations based on details in a photo by looking at over 90 million geotagged images taken from the internet. That means, for instance, that PlaNet can determine a particular photo was taken in Paris because it recognizes the Eiffel Tower. But most humans can do that too. What sets PlaNet apart is that it can use its deep-learning techniques to determine the locations of photos without distinctive landmarks, like the random roads and houses below.

The team behind PlaNet, which was led by software engineer Tobias Weyand, tested the program by taking 2.3 million geotagged images culled from Flickr and feeding them through PlaNet without giving the program access to the geotag data. PlaNet was able to correctly guess the location of images with street-level accuracy 3.6 percent of the time, with city-level accuracy 10.1 percent of the time, with country-level accuracy 28.4 percent of the time and with continent-level accuracy 48.0 percent of the time. If you aren't impressed, take a shot at doing this yourself with Geoguessr, a game that has you guess the location of images pulled from Google Maps. Tougher than it seems, right?
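For the curious, here is a rough sketch of how accuracy figures like these can be computed: measure the great-circle distance between each guess and the true geotag, then count how many guesses fall within a cutoff for each level. This is not the researchers' code. The cutoff distances in `THRESHOLDS_KM` and the function names are assumptions made for illustration, since the cutoffs are not stated here.

```python
import math

# Hypothetical distance cutoffs in kilometres for each accuracy level.
# These values are illustrative assumptions, not figures from the article.
THRESHOLDS_KM = {"street": 1, "city": 25, "country": 750, "continent": 2500}

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def accuracy_by_level(guesses, truths, thresholds=THRESHOLDS_KM):
    """Fraction of guesses that land within each level's distance cutoff."""
    errors = [haversine_km(*g, *t) for g, t in zip(guesses, truths)]
    return {
        level: sum(e <= km for e in errors) / len(errors)
        for level, km in thresholds.items()
    }


if __name__ == "__main__":
    eiffel_tower = (48.8584, 2.2945)
    london = (51.5074, -0.1278)
    # One exact guess and one guess that is off by the Paris-London distance.
    print(accuracy_by_level([eiffel_tower, london], [eiffel_tower, eiffel_tower]))
```

With these assumed cutoffs, a guess of London for a photo taken at the Eiffel Tower would miss at street and city level but still count at country and continent level, which shows how much the reported percentages depend on where the cutoffs are drawn.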

Weyand and his colleagues then pitted PlaNet against 10 "well-traveled humans." The program fared considerably better than its flesh-and-blood opponents, winning 28 out of 50 games played. While the lead isn't enormous, one more finding underscores the impressive feat this machine accomplished. PlaNet's localization error was about half that of the humans, meaning that while it might not have crushed its human opponents in game wins, its guesses landed roughly twice as close to the true location of a given picture as theirs did. Weyand told the MIT Technology Review that PlaNet had the upper hand over its human counterparts because of the vastly larger number of locations it's been able to "visit" by scanning images.

There was no indication from the researchers how Google plans to implement PlaNet going forward. The search giant's deep-learning systems recently bested a human champion at Go. Perhaps Carmen Sandiego is next?

[Image: landscape of a winding gravel road on a picturesque mountain plateau]