“Is data the new oil?” asked proponents of big data back in 2012 in Forbes magazine. By 2016, and the rise of big data’s turbo-powered cousin deep learning, we had become more certain: “Data is the new oil,” stated Fortune.

Amazon’s Neil Lawrence has a slightly different analogy: Data, he says, is coal. Not coal today, though, but coal in the early days of the 18th century, when Thomas Newcomen invented the steam engine. A Devonian ironmonger, Newcomen built his device to pump water out of the south west’s prolific tin mines.

The problem, as Lawrence told the Re-Work conference on Deep Learning in London, was that the pump was rather more useful to those who had a lot of coal than those who didn’t: it was good, but not good enough to buy coal in to run it. That was so true that the first of Newcomen’s steam engines wasn’t built in a tin mine, but in coal works near Dudley.

So why is data coal? The problem is similar: there are a lot of Newcomens in the world of deep learning. Startups like London’s Magic Pony and SwiftKey are coming up with revolutionary new ways to train machines to do impressive feats of cognition, from reconstructing facial data from grainy images to learning the writing style of an individual user to better predict which word they are going to type in a sentence.

And yet, like Newcomen, their innovations are so much more useful to the people who actually have copious amounts of raw material to work from. And so Magic Pony is acquired by Twitter, SwiftKey is acquired by Microsoft – and Lawrence himself gets hired by Amazon from the University of Sheffield, where he was based until three weeks ago.

But there is a coda to the story: 69 years later, James Watt made a nice tweak to the Newcomen steam engine, adding a condenser to the design. That change, Lawrence said, “made the steam engine much more efficient, and that’s what triggered the industrial revolution”.

Whether data is oil or coal, then, there’s another way the analogy holds up: a lot of work is going into trying to make sure we can do more, with less. It’s not as impressive as teaching a computer to play Go or Pac-Man better than any human alive, but “data efficiency” is a crucial step if deep learning is going to move away from simply gobbling up oodles of data and spitting out the best correlations possible.

“If you look at all the areas where deep learning is successful, they’re all areas where there’s lots of data,” points out Lawrence. That’s great if you want to categorise images of cats, but less helpful if you want to use deep learning to diagnose rare illnesses. “It’s generally considered unethical to force people to become sick in order to acquire data.”

Machines remain stupid

The problem is that for all the successes of organisations like Google’s AI research arm DeepMind, computers are still pretty awful at actually learning. I can show you a picture of an animal you’ve never seen before in your life – maybe a Quokka? – and that one image would provide you with enough information to correctly identify a completely different Quokka in a totally separate picture. Show the first image of a Quokka to even a good, pre-trained neural network, and you’ll be lucky if it even adjusts its model at all.

The flipside, of course, is that if you show a deep learning system several million pictures of Quokkas, along with a few million pictures of every other extant mammal, you could well end up with a mammal identification system which can beat all but the top-performing experts at categorising small furry things.

“Deep learning requires very large quantities of data in order to build up a statistical picture,” says Imperial College’s Murray Shanahan. “It actually is very very slow indeed at learning, whereas a young child is very quickly going to learn the idea.”

Deep learning experts have proposed several ways to tackle the problem of data efficiency. Like much of the field, they’re best thought of through analogy with your own brain.

One such approach involves “progressive neural networks”. It aims to overcome the problem that many deep learning models have when they move into a new field: either they ignore their already-learned information and start afresh, or run the risk of “forgetting” what they already learned as it gets overwritten by the new information. Imagine if your options when learning to identify Quokkas were either to independently relearn the entire concept of heads, bodies, legs and fur, or to try and incorporate your existing knowledge but risk forgetting what a cat looks like.

Raia Hadsell is in charge of DeepMind’s efforts to implement a better system for deep learning – one which is necessary if the company is to continue toward its long-term goal of building an artificial general intelligence: a machine capable of doing the same set of tasks as you or I.

“There is no model, no neural network, in the world that can be trained to both identify objects, play Space Invaders, and listen to music,” Hadsell said at Re-Work. “What we would like to be able to do is learn a task, get to [an] expert [level] at that task, and then move on to a second task. Then a third, then a fourth, then a fifth.

“We want to do that without forgetting. And with the ability to transfer from task to task: if I learn one task, I want that to help me learn the next task.” That’s what Hadsell’s team at DeepMind has been working on. Their method allows the learning system to “freeze” what it knows about one task – say, playing Pong – and then move on to the next task, while still being able to refer back to what it learned about the first one.

“That could be an interesting low-level vision feature” – learning how to parse individual objects out of the stream of visual data, for instance – “or a high-level policy feature”, such as the knowledge that the small white dot must remain on the correct side of your paddle. It’s easy to see how the former is useful to carry over to other Atari games, while the latter might only be useful if you’re trying to train Breakout. But if you are trying to train Breakout, it lets you skip a whole chunk of learning.
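The freeze-and-reuse idea can be sketched in a few lines of Python. This is a toy under loud assumptions, not DeepMind's implementation: the `Column` class, the two made-up regression tasks (`task_a`, `task_b`) and every number in it are hypothetical, and each "column" is a single tiny hidden layer trained on a simple curve rather than a deep network playing Atari. What it does show is the mechanism described above: the first column is trained and frozen, and the second column learns its own task while reading the first column's features through extra "lateral" weights.

```python
import copy
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


class Column:
    """One hypothetical 'column': a small tanh hidden layer and a linear output."""

    def __init__(self, n_in, n_hidden, n_lateral, rng):
        def rand_matrix(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.w_in = rand_matrix(n_hidden, n_in)        # input -> hidden
        self.w_lat = rand_matrix(n_hidden, n_lateral)  # earlier column's features -> hidden
        self.w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.frozen = False

    def hidden(self, x, lateral=()):
        return [math.tanh(dot(wi, x) + dot(wl, lateral))
                for wi, wl in zip(self.w_in, self.w_lat)]

    def predict(self, x, lateral=()):
        return dot(self.w_out, self.hidden(x, lateral))

    def train_step(self, x, target, lateral=(), lr=0.05):
        """One gradient-descent step on squared error; a frozen column refuses."""
        if self.frozen:
            raise RuntimeError("column is frozen")
        h = self.hidden(x, lateral)
        err = dot(self.w_out, h) - target
        for j, hj in enumerate(h):
            grad_pre = err * self.w_out[j] * (1.0 - hj * hj)  # back through tanh
            self.w_out[j] -= lr * err * hj
            for i, xi in enumerate(x):
                self.w_in[j][i] -= lr * grad_pre * xi
            for k, lk in enumerate(lateral):
                self.w_lat[j][k] -= lr * grad_pre * lk


def task_a(x):  # made-up first task
    return math.sin(x[0])


def task_b(x):  # made-up second task, related to the first
    return math.sin(x[0]) + 0.5 * x[1]


def sample(rng):
    return [rng.uniform(-1, 1), rng.uniform(-1, 1)]


def mean_sq_error(predict, task, points):
    return sum((predict(x) - task(x)) ** 2 for x in points) / len(points)


rng = random.Random(0)
test_points = [sample(rng) for _ in range(200)]

# 1. Learn task A in the first column, then freeze it.
col_a = Column(n_in=2, n_hidden=4, n_lateral=0, rng=rng)
for _ in range(3000):
    x = sample(rng)
    col_a.train_step(x, task_a(x))
col_a.frozen = True
snapshot = copy.deepcopy((col_a.w_in, col_a.w_out))


# 2. Add a second column for task B that also reads column A's features.
col_b = Column(n_in=2, n_hidden=4, n_lateral=4, rng=rng)


def predict_b(x):
    return col_b.predict(x, lateral=col_a.hidden(x))


error_before = mean_sq_error(predict_b, task_b, test_points)
for _ in range(3000):
    x = sample(rng)
    col_b.train_step(x, task_b(x), lateral=col_a.hidden(x))
error_after = mean_sq_error(predict_b, task_b, test_points)

print("task B error before/after:", round(error_before, 4), round(error_after, 4))
print("column A untouched:", (col_a.w_in, col_a.w_out) == snapshot)
```

Because only the second column's weights are ever updated in step 2, the first task cannot be "forgotten": whatever the first column could do before, it can still do afterwards.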

Obviously DeepMind is still a few steps away from actually using the technique to train an artificial general intelligence, which means it’s also a few steps away from accidentally unleashing a superintelligent AI on the world that will repurpose your brain into a node in a planet-wide supercomputer. But, Hadsell said, the progressive neural network technique does have some more immediate uses in improving data efficiency.

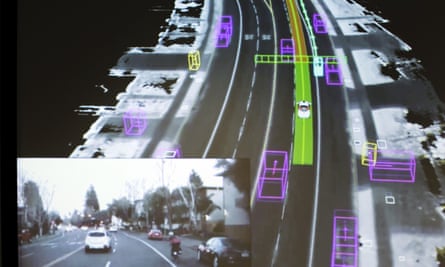

Take robotics. “Data is a problem for robots, because they break, they need minders, and they’re expensive,” she said. One approach is to use brute force on the problem: take, for example, the 2m miles Alphabet’s self-driving cars have travelled in their attempt to learn how to drive. At the beginning, the system was only safe to use on the freeway, and even then with a driver’s hand inches from the wheel. Now, it drives cars with no steering wheel at all – though not, yet, on public roads, for legal reasons.

Another approach is to teach the robot through simulation. Feed its sensors a rough approximation of the real world, and it will still learn mostly correctly: then you can “top up” the education with actual training. And the best way to do that is with progressive neural networks, she said.

Take one simple task: grabbing a floating ball using a robotic arm. “In a day, we trained this task robustly in simulation … if it had been done on a real robot it would have taken 55 days to train.” Hooked up to the real arm, just another two hours’ training was all it needed to get back to the same level of performance.

Teach them to think

Or there’s another approach. Imperial College’s Shanahan has been working in AI long enough to remember the first time it hit the hype cycle. Back then, the popular approach wasn’t deep learning, a method which has only become possible as processing power, storage space and, yes, data availability have all come of age. Instead, a popular approach was “symbolic” AI: focusing on building logical paradigms which could be generalised, and then fed information about the real world to teach them more. The “symbols” in symbolic AI are, Shanahan says, “a bit like sentences in English, that state facts about the world, or some domain.”

Unfortunately, that approach didn’t scale, and AI had a few years in a downturn. But Shanahan argues that there are benefits to a hybrid approach of the two. Not only would it help with the data efficiency problem, but it also helps with a related issue of transparency: “it’s very difficult to extract human-readable explanations for the decisions that they make,” he says. You can’t ask an AI why it decided that a Quokka was a Quokka; it just did.

Shanahan’s idea is to build up a symbolic style database not through hand-coding it, but by hooking it in with another approach, called deep reinforcement learning. That’s when an AI learns through trial and error, rather than by examining vast quantities of data. It was core to how DeepMind’s AlphaGo learned to play, for instance.
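To get a feel for what “trial and error” means here, consider a deliberately tiny stand-in. The sketch below is tabular Q-learning, a classic reinforcement learning method, and not the deep variant Shanahan’s team or AlphaGo used; the five-cell corridor, the reward of 1 at the far end and all the parameter values are assumptions made up for illustration. The agent is never shown a correct answer. It stumbles around, occasionally reaches the goal, and gradually learns which move pays off in each cell.

```python
import random

N_STATES = 5                 # hypothetical corridor: cells 0..4, goal at cell 4
ACTIONS = ("left", "right")


def step(state, action):
    """Move one cell; bumping into the left wall leaves the agent in place."""
    nxt = max(0, state - 1) if action == "left" else state + 1
    reward = 1.0 if nxt == N_STATES - 1 else 0.0
    return nxt, reward


def choose(q, state, epsilon, rng):
    """Mostly take the best-known action, sometimes explore at random."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[state].values())
    return rng.choice([a for a in ACTIONS if q[state][a] == best])


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    # q[state][action] is the agent's current estimate of long-run reward.
    q = [{a: 0.0 for a in ACTIONS} for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the length of any one attempt
            action = choose(q, state, epsilon, rng)
            nxt, reward = step(state, action)
            # The goal cell ends the episode, so it has no future value.
            future = 0.0 if nxt == N_STATES - 1 else max(q[nxt].values())
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = nxt
            if state == N_STATES - 1:
                break
    return q


q = train()
policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

The learned `policy` lists the preferred move in each non-goal cell. Deep reinforcement learning replaces the lookup table `q` with a neural network, which is what lets the same idea stretch to games with far too many positions to list.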

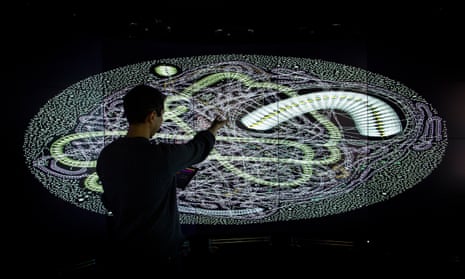

In a proof of concept, Shanahan’s team built an AI to play a simple game. In essence, the system is trained, not to play the game directly, but to teach a second system the rules of the game and the state of the world so that it can think in more abstract terms about what is going on.

Just like Hadsell’s approach, that pays off when the rules change slightly. Where a conventional deep learning system is flummoxed, Shanahan’s more abstracted system is able to think generally about the problem, see the similarities to the previous approach, and continue.

Think smart

To some extent, the data efficiency problem can be overstated. It’s true that you can learn something a heck of a lot faster than the typical deep learning system, for instance. But not only are you starting with years’ worth of previous knowledge that you’re building on – hardly a small amount of data – you also have a weakness that no good deep learning system would put up with: you forget. A lot.

That may turn out to be the cost of an efficient thinking system. Either you forget how to do stuff, or you spend ever-increasing resources simply sorting between the myriad things you know, trying to find the right one for each situation. But if that’s the price to pay for moving deep learning out of the research centres in the biggest internet companies, it could be worth it.